The AI revolution promised to transform software testing. But as organizations began deploying AI-powered solutions, they discovered an uncomfortable truth: most AI tools hallucinate, lack context, and demand blind trust. Yet Baserock is proving there's a fundamentally different approach—one where AI understands software deeply, remains under human control, and delivers credible, production-ready tests.

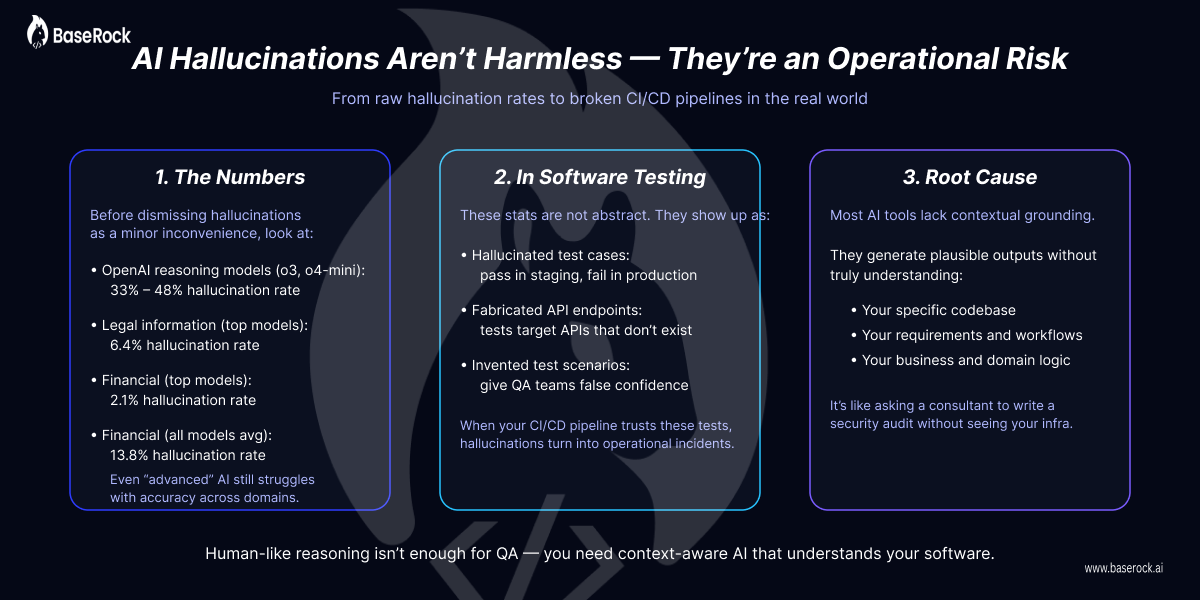

Before dismissing hallucinations as a minor inconvenience, consider the numbers. Even the most advanced large language models struggle with accuracy. OpenAI's o3 and o4-mini reasoning models hallucinated on 33% and 48% of questions, respectively, on the company's own PersonQA benchmark, a significant jump from earlier reasoning models. Across different domains, hallucination rates vary dramatically: legal information faces a 6.4% hallucination rate even among top models, while financial data averages 2.1% for top-tier models but climbs to 13.8% across all models.

In the context of software testing, these aren't abstract statistics. A hallucinated test case that passes in staging but fails in production. A fabricated API endpoint that doesn't exist. An invented test scenario that misleads your QA team into false confidence. When your CI/CD pipeline relies on test results, hallucinations become operational liabilities.

The fundamental issue is that most AI tools lack contextual grounding. They generate plausible-sounding outputs without truly understanding your specific software, its requirements, or its business logic. It's the digital equivalent of asking a consultant to write a security audit without ever examining your infrastructure.

While hallucinations grab headlines, another crisis quietly drains testing budgets: maintenance. According to the World Quality Report 2022-2023, maintenance expenses alone consume up to 50% of total test automation budgets. When you factor in ongoing technical support (10-20% of tool license costs annually), the economics of traditional test automation become grim.

This maintenance nightmare stems from the fundamental mismatch between static test scripts and dynamic applications. As your codebase evolves (new APIs are added, business logic shifts, UI elements change), every affected test script requires manual updates. Teams end up spending more time maintaining tests than creating them, a scenario repeated across thousands of organizations globally.

Here's where Baserock diverges from the typical AI wrapper. Instead of treating your software as a black box, Baserock learns it comprehensively.

Multiple sources converge into true understanding. Rather than relying on a single prompt, Baserock ingests source code, requirement documents, network traffic analysis, Postman collections, and API specifications. This multi-source learning creates a contextually rich model of how your system actually works—not how someone thinks it works. When the AI understands your API architecture, database schema, authentication flows, and business prerequisites, it stops generating hallucinations born from incomplete information.
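To make the idea of grounding concrete, here is a minimal sketch of one such check: confirming that every endpoint a generated test calls actually exists in the API specification. It is an illustration only, not Baserock's implementation. The spec fragment, the step format, and the function names (`is_grounded`, `ungrounded_steps`) are all assumptions made for this example.

```python
import re

# Hypothetical spec fragment, shaped like the "paths" object of an OpenAPI
# document: path template -> allowed HTTP methods.
SPEC_PATHS = {
    "/users": ["get", "post"],
    "/users/{id}": ["get", "delete"],
}

def template_to_regex(template: str) -> re.Pattern:
    """Turn a template such as '/users/{id}' into a regex matching '/users/42'."""
    parts = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join(
        "[^/]+" if part.startswith("{") else re.escape(part) for part in parts
    )
    return re.compile(f"^{pattern}$")

def is_grounded(method: str, path: str, spec_paths: dict) -> bool:
    """True if the method and path pair is declared somewhere in the spec."""
    for template, methods in spec_paths.items():
        if method.lower() in methods and template_to_regex(template).match(path):
            return True
    return False

def ungrounded_steps(steps, spec_paths):
    """Return the (method, path) steps that the spec does not back up."""
    return [step for step in steps if not is_grounded(step[0], step[1], spec_paths)]

# Steps from a hypothetical generated test; the last endpoint is fabricated.
generated_steps = [
    ("POST", "/users"),
    ("GET", "/users/42"),
    ("PUT", "/users/42/avatar"),
]

print(ungrounded_steps(generated_steps, SPEC_PATHS))
# → [('PUT', '/users/42/avatar')]
```

A check like this catches only one class of hallucination, the invented endpoint. Grounding against source code, traffic, and requirements would need comparable checks of their own.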

Open-Questions bridge the final gap. After its autonomous learning phase, Baserock generates targeted questions for its human experts. It does not ask for everything, only for the domain knowledge that AI cannot deduce. These questions are intelligent and focused, and they minimize the friction of human involvement. Combined with Baserock's "playbook" feature (plain English descriptions of API interconnections and test prerequisites), this creates a human-in-the-loop system that actually respects human time.

One of the most underrated features of credible AI is explainability. When an AI testing tool generates a complex end-to-end test scenario, can you understand why it chose those specific steps? Most AI tools can't answer this question—they just produce results.

Baserock's AI-Workflow feature makes the reasoning transparent. Users can see exactly how natural language instructions have been converted into actionable backend steps. This isn't a black box; it's a glass box. If something looks wrong, you catch it before execution. If stakeholders question a test strategy, you have documented reasoning to reference. This aligns with broader industry demands for AI transparency and accountability—requirements that are rapidly becoming regulatory necessities across finance, healthcare, and government sectors.

The tension between cloud convenience and data security isn't theoretical—it's regulatory. GDPR, HIPAA, SOC 2, and PCI-DSS impose strict requirements on data residency and access.

Baserock offers both deployment models. Organizations can choose SaaS for simplicity or on-premises deployment for absolute control.

Furthermore, organizations retain control over LLM provider selection. Rather than being locked into a single vendor's inference layer, teams can bring their own API keys from preferred providers. This flexibility prevents vendor lock-in while maintaining sovereignty over critical testing infrastructure.
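As a rough illustration of what "bring your own key" can look like in configuration terms, the sketch below resolves a provider name and its API key from environment-style settings. The variable names (`LLM_PROVIDER`, `<PROVIDER>_API_KEY`) and the `load_provider_config` helper are hypothetical and are not taken from Baserock's documentation.

```python
import os
from dataclasses import dataclass

@dataclass(frozen=True)
class LLMProviderConfig:
    provider: str
    api_key: str

def load_provider_config(env=os.environ) -> LLMProviderConfig:
    """Resolve the chosen provider and its key from a mapping of settings.

    Assumed convention (hypothetical): LLM_PROVIDER names the provider and
    <PROVIDER>_API_KEY holds the customer's own key for that provider.
    """
    provider = env.get("LLM_PROVIDER", "").strip().lower()
    if not provider:
        raise ValueError("LLM_PROVIDER is not set")
    key_var = f"{provider.upper()}_API_KEY"
    api_key = env.get(key_var)
    if not api_key:
        raise ValueError(f"{key_var} is not set")
    return LLMProviderConfig(provider=provider, api_key=api_key)

# Demonstration with an explicit mapping instead of the real environment.
settings = {"LLM_PROVIDER": "acme", "ACME_API_KEY": "example-key"}
config = load_provider_config(settings)
print(config.provider)
```

Keeping the key in the customer's own environment, rather than in the vendor's configuration, is what lets a team switch providers by changing two settings.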

Most AI testing tools treat code changes as problems to be manually remediated. Baserock treats them as learning opportunities.

When your source code changes, Baserock observes those changes and automatically updates corresponding test cases with the user's consent. This doesn't mean blindly replacing old tests—it means intelligently evolving them. Redundant tests get consolidated. Tests affected by code changes get regenerated. New coverage gaps get identified. Users receive notifications about what changed and can verify the updates align with intent.
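The update cycle described above can be sketched as a small planning step followed by an approval gate. This is a simplified model under stated assumptions, not Baserock's algorithm: each test is assumed to record which source files it exercises, "redundant" is approximated as "covers exactly the same files as an earlier test", and the names `SuiteEntry`, `plan_updates`, and `apply_with_consent` are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SuiteEntry:
    name: str
    covers: frozenset  # source files this test exercises (assumed to be known)

def plan_updates(tests, changed_files, all_files):
    """Build a proposal: tests to regenerate, redundant tests, coverage gaps."""
    changed = set(changed_files)
    regenerate = [t.name for t in tests if t.covers & changed]

    # Crude redundancy proxy: identical coverage to an earlier test.
    first_seen = {}
    redundant = []
    for t in tests:
        if t.covers in first_seen:
            redundant.append((t.name, first_seen[t.covers]))
        else:
            first_seen[t.covers] = t.name

    covered = set().union(*(t.covers for t in tests))
    gaps = sorted(set(all_files) - covered)
    return {"regenerate": regenerate, "redundant": redundant, "gaps": gaps}

def apply_with_consent(plan, approve):
    """Only tests the user approves are actually regenerated."""
    return [name for name in plan["regenerate"] if approve(name)]

tests = [
    SuiteEntry("login_ok", frozenset({"auth.py"})),
    SuiteEntry("login_dup", frozenset({"auth.py"})),
    SuiteEntry("checkout", frozenset({"cart.py", "pay.py"})),
]
plan = plan_updates(
    tests,
    changed_files=["pay.py"],
    all_files=["auth.py", "cart.py", "pay.py", "search.py"],
)
print(plan)
print(apply_with_consent(plan, approve=lambda name: True))
```

The point of the approval callback is that the plan is only a proposal. Nothing is regenerated until a person has seen what changed and agreed to it.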

This is the inverse of the traditional maintenance nightmare. Instead of QA teams scrambling to update tests after each deployment, the system proactively handles the grunt work while keeping humans informed and in control. Over months and years, this compounds into extraordinary time savings—the difference between test suites that become liabilities and test suites that become assets.

Baserock's proof-of-value engagements consistently demonstrate a striking reality: test suites that required months to build via traditional automation tools are delivered in weeks. This isn't achieved by cutting corners or reducing coverage. It comes from a fundamentally different methodology.

Where traditional tools require extensive framework setup, programming language expertise, and manual test crafting, Baserock combines autonomous learning from multiple sources with minimal human input. The human effort is strategically deployed: not on repetitive scripting, but on providing domain expertise and validating quality. This represents a qualitative shift in how testing gets organized.

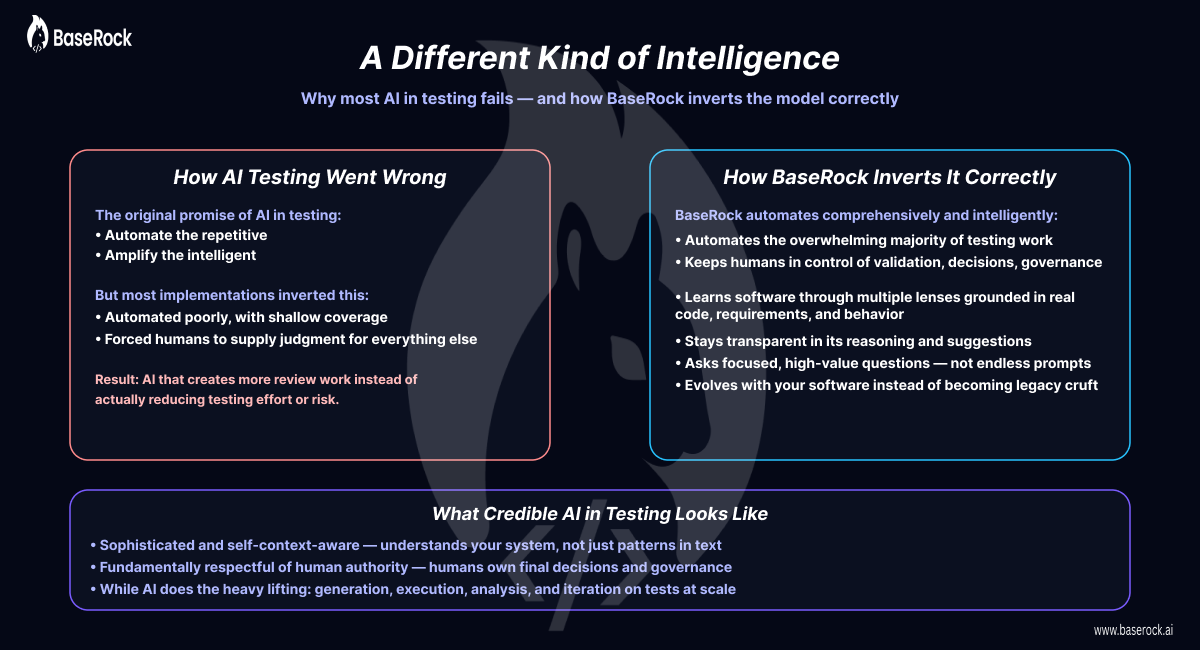

The promise of AI in testing was always compelling: automate the repetitive, amplify the intelligent. But most implementations inverted this—they automated poorly and demanded human judgment for everything else.

Baserock inverts it correctly. It automates comprehensively and intelligently, while keeping humans in control of validation, decision-making, and governance. It learns software through multiple lenses, grounds knowledge in actual code and requirements rather than hallucinated assumptions, and remains transparent about its reasoning. It respects human expertise by asking focused questions rather than demanding exhaustive input. And it evolves with your software rather than becoming legacy cruft.

This is what credible AI looks like in practice: sophisticated, context-aware, and fundamentally respectful of human authority while doing the overwhelming majority of the work.

The question isn't whether AI should transform testing—that transformation is already underway. The question is whether you'll settle for tools that hallucinate, demand black-box trust, and create new maintenance burdens, or whether you'll explore platforms built on entirely different principles.

Baserock offers proof-of-value engagements specifically designed to let you see these differences firsthand. No theoretical claims. No vague benchmarks. A real assessment of your software, genuine test generation, and a clear view of the credibility gap between typical AI tools and genuinely intelligent systems.

If you've been skeptical about AI testing due to hallucinations, lack of transparency, or privacy concerns, Baserock is worth evaluating. It represents a fundamentally different approach—one that might change your perspective on what AI can credibly accomplish in software quality engineering.
